Where infants look can tell us what they know about the world.

As any parent knows, infants cannot readily tell you what they want or need. This also means that they cannot directly express what they know.

For developmental psychologists, who are often interested in what infants know and when they come to know it, this can be pretty challenging. So how do researchers ask infants whether they know the meaning of a word, or if they recognize the language someone is speaking?

Researchers can observe how infants respond when presented with a variety of stimuli! Researchers usually use sounds or pictures as stimuli.

What are the behaviors that can clue researchers in to what infants know? For typically developing infants, language researchers often use two types of methods that both rely on where an infant is looking: looking time methods and eye-tracking methods.

Looking time methods:

Looking time methods can ask questions such as: do babies notice if something changed? To do this, researchers measure how long infants listen to or look at a specific stimulus, and then compare overall looking times across stimulus types.

For example, imagine you want to know if infants know that “cat” and “dog” mean different things. You could first show infants a picture of a dog while labeling it (e.g. “dog, dog, dog, dog”). Then, you could switch the picture to a picture of a cat, but continue labeling it as “dog” (which, of course, is wrong!). You can then compare infants’ looking times on each type of trial (when the object and word match vs. when the object and word do not match).

If infants know that cats and dogs are not the same, then you would expect them to look longer when they see a cat but hear the word “dog” because this does not match their expectations, compared to when they see a dog and hear the word “dog”, which does match their expectations.
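To make the comparison concrete, here is a minimal sketch in Python of how looking times on the two trial types might be compared. The infant IDs, the looking times, and the function name `mismatch_effect` are all invented for illustration, and a real study would follow this with a proper statistical test.

```python
from statistics import mean

# Hypothetical looking times in seconds (invented numbers, not real data).
looking_times = {
    "infant_01": {"match": [4.0, 5.0], "mismatch": [7.0, 8.0]},
    "infant_02": {"match": [6.0, 6.0], "mismatch": [6.5, 7.5]},
}

def mismatch_effect(trials):
    """Mean looking time on mismatch trials minus mean on match trials."""
    return mean(trials["mismatch"]) - mean(trials["match"])

# One difference score per infant, then the average across infants.
effects = {infant: mismatch_effect(t) for infant, t in looking_times.items()}
group_effect = mean(list(effects.values()))

print(effects)
print(group_effect)
```

A positive score means that an infant looked longer when the picture and the word did not match, which is the pattern you would expect if the mismatch surprised them.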

As another example, you can ask whether infants notice when you switch one sound in a word (e.g. they heard “dog” and then it is switched to “dug”), or when you switch the talker (e.g. they always heard a female voice say “dog”, and then it is switched to a male voice saying “dog”). This is a way to look at infants’ expectations about how words sound.

To summarize, looking time methods rely on differences in the length of time infants look at a screen depending on the type of stimuli that they see or hear. This methodology is particularly useful for understanding which stimuli infants treat as similar or different.

Eye-tracking methods:

Eye-tracking methods also rely on infants’ looking patterns, but provide more fine-grained information about where infants are looking as well as what they are looking at.

Imagine now that you are interested in whether infants know the meanings of common words, such as knowing what a ‘phone’ and a ‘hat’ are. You could present infants with two images on a screen (a phone and a hat), ask them to look for an object (e.g. “Do you see the phone?” or “Can you find the hat?”), and then measure which image they look at after they hear the question.

If a given group of infants spent more of their time looking at the appropriate image, it would suggest that they know which of the two objects the word refers to.

Of course, infants might look at some pictures more just because they like them more, but researchers have ways to account for these preferences in their analyses.
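As a rough illustration, here is one possible way to take such preferences into account: compare how much infants look at the named picture after the word is spoken with how much they looked at it before. This is a sketch under assumptions, not necessarily the analysis any particular lab uses. The gaze labels, the 100 ms sampling step, the 500 ms word onset, and the function name `proportion_target` are all hypothetical.

```python
# Hypothetical gaze record for one trial: one label every 100 ms.
looks = [
    "distractor", "target", "away", "target", "distractor",  # before the word
    "away", "target", "target", "distractor", "target",      # after the word
    "target", "away", "distractor", "target", "target",
]
samples = [(i * 100, look) for i, look in enumerate(looks)]

WORD_ONSET_MS = 500  # assumed time at which the target word is spoken

def proportion_target(samples, start_ms, end_ms):
    """Share of on-picture looks that land on the target within a time window."""
    in_window = [look for t, look in samples if start_ms <= t < end_ms]
    target = in_window.count("target")
    distractor = in_window.count("distractor")
    total = target + distractor
    return target / total if total else None

baseline = proportion_target(samples, 0, WORD_ONSET_MS)
after_word = proportion_target(samples, WORD_ONSET_MS, 1500)
corrected = after_word - baseline  # above 0: more target looking after the word

print(baseline, after_word, corrected)
```

If an infant simply likes the phone picture more, that preference shows up both before and after the word, so subtracting the baseline leaves only the change that follows hearing the word.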

This type of research can be done using both high- and low-tech methods, both of which are completely non-invasive.

Video caption: A prompt “Where’s the phone?” is spoken after about half a second. The purple dot represents where the infant is looking in real time before, during, and after the prompt.

The Fancy Way

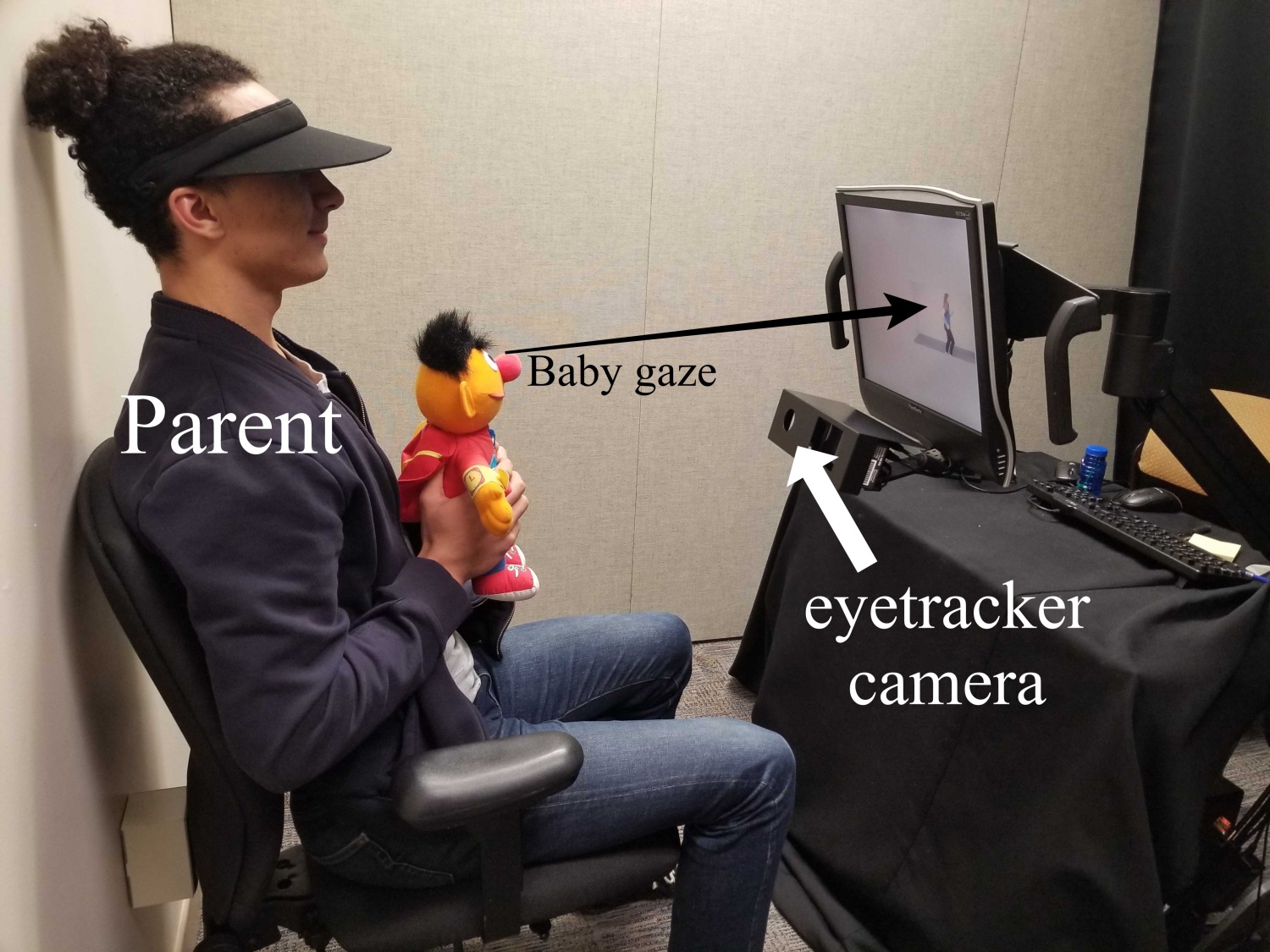

The high-tech methods for eye-tracking work by shining near-infrared light on the eyes and using special cameras to take high-resolution images of where the eyes are looking. This can be done with an eye-tracker, for example an EyeLink. This is great because it automatically tracks where kids are looking, though these fancy systems can be pricey. This is the method being used in the picture with the “parent” and Ernie below.

The Old-Fashioned Way

The low-tech (and cheaper) method just points a regular camera at the participant’s face, and later researchers go back and note whether the participant was looking at the left or right side of the screen. This can be done in lots of settings, which is a big plus, but it is also very time-consuming, as researchers have to go through each video frame by frame and determine whether the participant looked left or right.
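Once the frames have been coded by hand, turning them into looking times is simple arithmetic. Here is a minimal sketch, assuming a camera that records 30 frames per second and a made-up coding scheme where each frame is marked "L" (left), "R" (right), or "-" (looking away or blinking). The frame codes and the function name `seconds_per_side` are hypothetical.

```python
from collections import Counter

FRAME_RATE = 30  # frames per second; an assumed value for a regular video camera

# Hypothetical hand-coded frames for one trial (invented for illustration).
frame_codes = "L" * 15 + "-" * 3 + "R" * 12

def seconds_per_side(codes, frame_rate=FRAME_RATE):
    """Convert per-frame codes into seconds spent looking at each side."""
    counts = Counter(codes)
    return {side: counts[side] / frame_rate for side in ("L", "R")}

durations = seconds_per_side(frame_codes)
print(durations)
```

The slow part is the human work of assigning a code to every frame, not the calculation that follows it.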

In Summary:

Eye-tracking methods give greater insight into where infants are looking on a screen, which lets researchers infer different types of information about what infants know.

These methods can be used to find out if infants know the meanings of many different types of words like nouns, verbs, and adjectives.

For example, in 2017, the Bergelson lab published a paper supporting the idea that six-month-old babies understand that some words are more closely related to each other (e.g. foot and hand) than other words (e.g. foot and milk).

Whether by measuring looking time or using eye-tracking, researchers use behavioral methods to investigate what infants know before they can tell us.

Federica Bulgarelli

Author

Federica is currently a postdoc in the Bergelson Lab at Duke. She recently completed a PhD in Cognitive Psychology and Language Science at Penn State working with Dr. Dan Weiss in the Child Language and Cognition Lab.